Biodiv Sci ›› 2023, Vol. 31 ›› Issue (7): 23087. DOI: 10.17520/biods.2023087 cstr: 32101.14.biods.2023087

• Technology and Methodology •

Jianmin Cai1, Peiyu He1, Zhipeng Yang2, Luying Li2, Qijun Zhao3, Fan Pan1,*

Received: 2023-03-08

Accepted: 2023-06-14

Online: 2023-07-20

Published: 2023-06-29

Jianmin Cai, Peiyu He, Zhipeng Yang, Luying Li, Qijun Zhao, Fan Pan. A deep feature fusion-based method for bird sound recognition and its interpretability analysis[J]. Biodiv Sci, 2023, 31(7): 23087.

| Model | Parameter name | Parameter value |
|---|---|---|
| Deep feature extraction network | Optimizer | Adam |
| | Learning rate | 0.01 |
| | Epochs | 200 |
| | Batch size | 16 |
| | Loss function | Categorical cross-entropy |
| Classifier | Learning rate | 0.01 |
| | Boosting method | GBDT |
| | Max depth | 4 |

Table 1 Model and model parameter list
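The settings in Table 1 can be captured as a small configuration object. The sketch below is only an illustration: the class names, the helper functions, and the key spellings (`boosting_type`, `learning_rate`, `max_depth`, `categorical_crossentropy`) are assumptions chosen to resemble common LightGBM and Keras conventions; the page does not state which framework or parameter names the authors used.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ExtractorConfig:
    """Training settings of the deep feature extraction network (Table 1)."""
    optimizer: str = "adam"
    learning_rate: float = 0.01
    epochs: int = 200
    batch_size: int = 16
    loss: str = "categorical_crossentropy"  # key spelling is an assumption


@dataclass(frozen=True)
class ClassifierConfig:
    """Settings of the boosted-tree classifier (Table 1)."""
    learning_rate: float = 0.01
    boosting_type: str = "gbdt"  # key name is an assumption
    max_depth: int = 4


def as_params(cfg) -> dict:
    """Turn a config into a plain dict that could be passed on as keyword arguments."""
    return asdict(cfg)


def steps_per_epoch(n_samples: int, batch_size: int) -> int:
    """Number of mini-batches per epoch, counting a final partial batch."""
    return -(-n_samples // batch_size)  # ceiling division


if __name__ == "__main__":
    print(as_params(ExtractorConfig()))
    print(as_params(ClassifierConfig()))
    # Hypothetical dataset size, used only to show the batch arithmetic.
    print(steps_per_epoch(1000, ExtractorConfig().batch_size))
```

Keeping the two groups of settings in separate frozen objects mirrors the two-stage layout of the table (feature extractor first, classifier second) and avoids accidentally sharing the learning rate between them.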


| Model | Average accuracy (%) | Average F1-score (%) |
|---|---|---|
| logMel + mobileNetV3 | 90.20 | 90.11 |
| logMel + EfficientNetV2 | 93.08 | 93.20 |
| logMelMAPS + EfficientNetV2 | 95.69 | 95.73 |
| EGeMAPS + EfficientNetV2 | 77.41 | 77.45 |
| logEGeMAPS + EfficientNetV2 | 91.56 | 91.42 |
| Deep logEGeMAPS + lightGBM | 97.13 | 97.12 |
| Deep logMelMAPS + lightGBM | 98.71 | 98.69 |
| Deep fusion features (mobileNetV3) + lightGBM | 97.77 | 97.76 |
| Deep fusion features + SVM | 98.83 | 98.82 |
| Deep fusion features + Random Forest | 98.82 | 98.81 |
| Deep fusion features + XGBoost | 98.64 | 98.63 |
| Deep fusion features + lightGBM | 98.70 | 98.82 |

Table 2 Benchmark experiment results based on Beijing Bird dataset (The best result is highlighted in bold)
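For readers who want to reproduce the two metric columns, the following sketch computes accuracy and a macro-averaged F1-score from predicted labels. It assumes the "average F1-score" is the unweighted mean of per-class F1 values; the page does not say how the averaging was done (over classes, folds, or runs), so this is one plausible reading rather than the authors' exact procedure. The class labels in the usage lines are placeholders.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed averaging scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative
    scores = []
    for label in labels:
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Placeholder labels, not taken from any of the datasets in the tables.
    y_true = ["a", "a", "b", "b"]
    y_pred = ["a", "b", "b", "b"]
    print(f"accuracy = {100 * accuracy(y_true, y_pred):.2f}%")
    print(f"macro F1 = {100 * macro_f1(y_true, y_pred):.2f}%")
```

Because macro averaging weights every class equally, accuracy and F1 can diverge when classes are imbalanced, which may explain small differences between the two columns in the tables.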


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| GWO-KELM | 91.16 | 88.54 | Li, 2022① |
| LogMel + CRNN | 92.89 | 89.64 | Adavanne et al, 2017 |
| LogMel + CNN | 91.12 | 88.47 | Bold et al, 2019 |
| logMel + DSRN + DilatedSAM + BiLSTM | 96.58 | 96.51 | Li, 2022① |
| Deep fusion features + lightGBM | 98.70 | 98.82 | This study |

① Li Dapeng (2022) Research on bird sound recognition algorithms in natural scenes. Master's thesis, Nanjing University of Information Science and Technology, Nanjing.

Table 3 Comparison of different model experimental results based on Beijing Bird dataset (The best result is highlighted in bold)


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| logMel + SVM | 93.96 | - | Salamon et al, 2016 |
| Deep fusion feature-1-2-3 + min-max normalization + KNN | 93.89 | - | Ji et al, 2022 |
| Deep fusion features + lightGBM | 98.32 | 98.04 | This study |

Table 4 Comparison of different model experimental results based on CLO-43S dataset (The best result is highlighted in bold)


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| Best model on the BirdCLEF2022 public leaderboard | - | 91.28 | https://www.kaggle.com/competitions/birdclef-2022/leaderboard?tab=public |
| Deep logEGeMAPS + lightGBM (logMelMAPS in the Chinese label) | 88.53 | 88.32 | This study |
| Deep fusion features + SVM | 89.40 | 89.22 | This study |
| Deep fusion features + Random Forest | 85.47 | 84.77 | This study |
| Deep fusion features + XGBoost | 85.71 | 85.47 | This study |
| Deep fusion features + lightGBM | 91.12 | 91.05 | This study |

Table 5 Comparison of different model experimental results based on BirdCLEF2022 competition dataset (The best result is highlighted in bold)


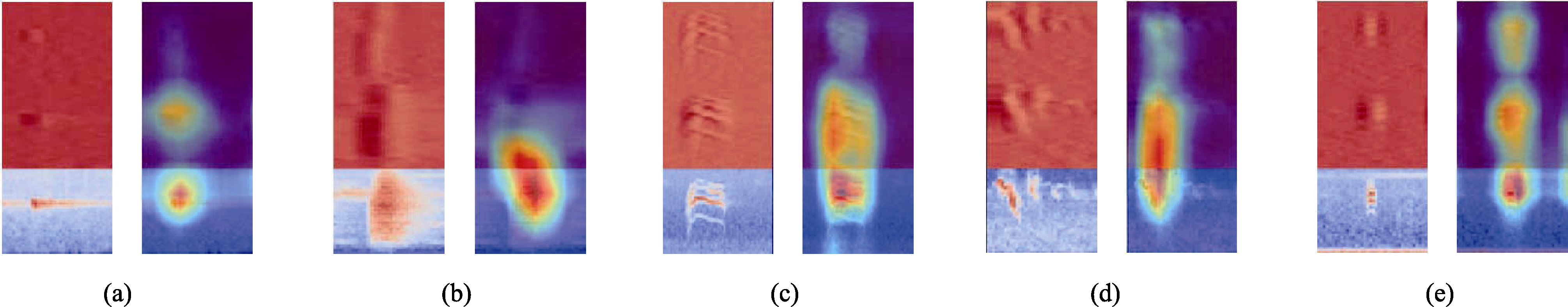

Fig. 3 Class activation maps of five bird song types. Panels a-e show the logMelMAPS of western coconuts (constant frequency), heron (broadband with varying frequency components), Eurasian eagle (strong harmonics), sparrow (frequency-modulated whistles), and black-winged sandpiper (broadband pulses), each with its corresponding class activation map. In each panel, the left side shows the logMelMAPS feature map, composed (from bottom to top) of the logMel spectrogram, the logMel first-order differential spectrogram, and the logMel second-order differential spectrogram; the horizontal axis is time and the vertical axis is frequency. The right side shows the corresponding class activation map, where darker colors mark regions to which the neural network pays more attention.
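The layout of the logMelMAPS feature map described in the caption (logMel spectrogram at the bottom, first-order differential in the middle, second-order differential on top) can be sketched as follows. This is a simplified illustration under stated assumptions: the differential is taken as a plain frame-to-frame difference along time, whereas the authors may have used a windowed delta estimate, and the function names and the tiny input matrix are hypothetical.

```python
def time_difference(spec):
    """Frame-to-frame difference along the time axis.

    `spec` is a list of frequency rows, each a list of values over time.
    The first frame gets 0.0 so the output keeps the input shape.
    """
    return [
        [0.0] + [row[t] - row[t - 1] for t in range(1, len(row))]
        for row in spec
    ]


def stack_log_mel_maps(log_mel):
    """Stack logMel, first-order, and second-order differentials.

    Row 0 is treated as the bottom of the image, so the result reads
    bottom to top as: logMel, first-order, second-order.
    """
    delta = time_difference(log_mel)
    delta2 = time_difference(delta)
    return log_mel + delta + delta2


if __name__ == "__main__":
    # Toy 2-band, 3-frame "spectrogram"; values are placeholders.
    log_mel = [[0.0, 1.0, 3.0],
               [2.0, 2.0, 2.0]]
    for row in stack_log_mel_maps(log_mel):
        print(row)
```

Stacking along the frequency axis yields a single tall image, which matches the caption's description of one feature map per panel; stacking the three parts as separate channels would be an alternative design that the caption does not describe.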

| [1] | Adavanne S, Drossos K, Cakir E, Virtanen T (2017) Stacked convolutional and recurrent neural networks for bird audio detection. In: Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Greek Island, Greece. |

| [2] | Bold N, Zhang C, Akashi T (2019) Cross-domain deep feature combination for bird species classification with audio-visual data. IEICE Transactions on Information and Systems, 102, 2033-2042. |

| [3] | Brandes TS (2008) Automated sound recording and analysis techniques for bird surveys and conservation. Bird Conservation International, 18, S163-S173. |

| [4] | Dan S (2022) Computational bioacoustics with deep learning: A review and roadmap. PeerJ, 10, e13152. |

| [5] | Eyben F, Scherer KR, Schuller BW, Sundberg J, André E, Busso C, Devillers LY, Epps J, Laukka P, Narayanan SS, Truong KP (2015) The Geneva minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Transactions on Affective Computing, 7, 190-202. |

| [6] | Eyben F, Wöllmer M, Schuller B (2010) Opensmile: The Munich versatile and fast open-source audio feature extractor. In: Proceedings of the 18th ACM International Conference on Multimedia (eds del Bimbo A, Chang SF), pp. 1459-1462. Association for Computing Machinery, New York. |

| [7] | Gupta G, Kshirsagar M, Zhong M, Gholami S, Ferres JL (2021) Comparing recurrent convolutional neural networks for large scale bird species classification. Scientific Reports, 11, 17085. |

| [8] | Ji XS, Jiang K, Xie J (2022) Deep feature fusion of multi-dimensional neural network for bird call recognition. Journal of Signal Processing, 38, 844-853. (in Chinese with English abstract) |

| [吉训生, 江昆, 谢捷 (2022) 基于多维神经网络深度特征融合的鸟鸣识别算法. 信号处理, 38, 844-853.] | |

| [9] | Ke GL, Meng Q, Finley T, Wang TF, Chen W, Ma WD, Ye QW, Liu TY (2017) LightGBM: A highly efficient gradient boosting decision tree. In: NIPS'17: Proceedings of the 31st International Conference on Neural Information Processing Systems (eds von Luxburg U, Guyon I, Bengio S, Wallach H, Fergus R), pp. 3149-3157. Curran Associates Inc., New York. |

| [10] | Li HC, Yang DW, Wen ZF, Wang YN, Chen AB (2022) Inception-CSA deep learning model-based classification of bird sounds. Journal of Huazhong Agricultural University, 42(3), 97-104. (in Chinese with English abstract) |

| [李怀城, 杨道武, 温治芳, 王亚楠, 陈爱斌 (2022) 基于Inception- CSA深度学习模型的鸟鸣分类. 华中农业大学学报, 42(3), 97-104.] | |

| [11] | Salamon J, Bello JP, Farnsworth A, Robbins M, Keen S, Klinck H, Kelling S (2016) Towards the automatic classification of avian flight calls for bioacoustic monitoring. PLoS ONE, 11, e0166866. |

| [12] | Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV) (ed. O'Conner L), pp. 618-626. IEEE Computer Society Customer Service Center, California. |

| [13] | Sprengel E, Jaggi M, Kilcher Y, Hofmann T (2016) Audio based bird species identification using deep learning techniques. In: Conference and Labs of the Evaluation Forum (CLEF) 2016, pp. 547-559. Évora, Portugal. |

| [14] | Stowell D (2022) Computational bioacoustics with deep learning: A review and roadmap. PeerJ, 10, e13152. |

| [15] | Tan MX, Le QV (2021) EfficientNetV2: Smaller models and faster training. arXiv e-prints, doi: 10.48550/arXiv.2104.00298. |

| [16] | Xie J, Zhu MY (2019) Handcrafted features and late fusion with deep learning for bird sound classification. Ecological Informatics, 52, 74-81. |

| [17] | Yan N, Chen AB, Zhou GX, Zhang ZQ, Liu XY, Wang JW, Liu ZH, Chen WJ (2021) Birdsong classification based on multi-feature fusion. Multimedia Tools and Applications, 80, 36529-36547. |

| [18] | Zhang FY, Zhang LY, Chen HX, Xie JJ (2021) Bird species identification using spectrogram based on multi-channel fusion of DCNNs. Entropy, 23, 1507. |



Copyright © 2022 Biodiversity Science

Editorial Office of Biodiversity Science, 20 Nanxincun, Xiangshan, Beijing 100093, China

Tel: 010-62836137, 62836665 E-mail: biodiversity@ibcas.ac.cn