Biodiversity Science, 2023, Vol. 31, Issue (7): 23087. DOI: 10.17520/biods.2023087 cstr: 32101.14.biods.2023087

Jianmin Cai1, Peiyu He1, Zhipeng Yang2, Luying Li2, Qijun Zhao3, Fan Pan1,*

Received: 2023-03-08

Accepted: 2023-06-14

Published: 2023-07-20

Online: 2023-06-29

Corresponding author: Fan Pan (*E-mail: panfan@scu.edu.cn)


Abstract:

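Bird sound recognition is an important tool for ecological monitoring. This paper proposes a new bird sound recognition method based on deep feature fusion, designed to further improve recognition accuracy and robustness. The method works in three steps. First, deep feature extraction networks extract deep features from the log-Mel spectrogram of bird sounds and from a supplementary feature set. Next, the two kinds of deep features are fused. Finally, the fused features are classified with a light gradient boosting machine (lightGBM) classifier. Separating feature extraction from classification lets the method combine the feature-extraction capability of deep neural networks with the classification performance of lightGBM, which yields high-accuracy bird sound recognition. On the Beijing Bird dataset, the proposed method achieves the best results known to date, with an average accuracy of 98.70% and an average F1-score of 98.84%. Compared with conventional methods, the deep fusion features improve recognition accuracy by at least 5.62 percentage points. Introducing the lightGBM classifier raises classification accuracy by 3.02 percentage points. The method also performs strongly on the CLO-43SD dataset and the BirdCLEF2022 competition dataset, reaching average accuracies of 98.32% and 91.12%, respectively. Class activation maps are further used to interpret the recognition results for different types of bird sounds. They reveal how the regions the network attends to differ across sound types, which gives a basis for subsequent feature selection and model optimization. These results show that the method effectively improves bird sound recognition accuracy and performs well on all three datasets. It can therefore provide solid technical support for ecological monitoring based on bird sound recognition.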
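The abstract separates feature extraction from classification: two deep embeddings are fused, and the fused vectors go to a separate classifier. Below is a minimal sketch of the fusion step. It assumes that fusion is plain concatenation of the two per-clip embeddings, because the fusion operator is not stated in this section. The function and variable names (`fuse_deep_features`, `logmel_emb`, `supp_emb`) are hypothetical.

```python
import numpy as np


def fuse_deep_features(logmel_emb: np.ndarray, supp_emb: np.ndarray) -> np.ndarray:
    """Fuse two deep embeddings per clip by concatenation (assumed fusion operator).

    logmel_emb: (n_clips, d1) embeddings from the log-Mel branch.
    supp_emb:   (n_clips, d2) embeddings from the supplementary-feature branch.
    Returns an (n_clips, d1 + d2) matrix, one fused row per clip.
    """
    if logmel_emb.shape[0] != supp_emb.shape[0]:
        raise ValueError("both branches must describe the same clips")
    return np.concatenate([logmel_emb, supp_emb], axis=1)


# Hypothetical embeddings for 4 clips: a 3-dim branch and a 2-dim branch.
rng = np.random.default_rng(0)
logmel_emb = rng.normal(size=(4, 3))
supp_emb = rng.normal(size=(4, 2))
fused = fuse_deep_features(logmel_emb, supp_emb)
print(fused.shape)  # (4, 5)

# With the lightgbm package installed, the fused matrix would then be used to fit a
# classifier, e.g. lightgbm.LGBMClassifier().fit(fused, labels); not executed here.
```

Because the extractor and classifier are decoupled, the same `fused` matrix can be handed to any tabular classifier. This is how the SVM, Random Forest, XGBoost and lightGBM rows in the tables differ only in their last stage.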


Jianmin Cai, Peiyu He, Zhipeng Yang, Luying Li, Qijun Zhao, Fan Pan (2023) A deep feature fusion-based method for bird sound recognition and its interpretability analysis. Biodiversity Science, 31, 23087. DOI: 10.17520/biods.2023087.

| Model | Parameter | Value |
|---|---|---|
| Deep feature extraction network | Optimizer | Adam |
| | Learning rate | 0.01 |
| | Epochs | 200 |
| | Batch size | 16 |
| | Loss function | Categorical cross-entropy |
| Classifier | Learning rate | 0.01 |
| | Boosting method | GBDT |
| | Max depth | 4 |

Table 1 Models and their parameters
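The settings in Table 1 can be collected as plain configuration dictionaries. This is a sketch only. The key names are assumptions chosen to match common Keras and lightgbm keyword conventions; the table does not say which libraries the authors used.

```python
# Table 1 hyperparameters for the deep feature extraction network.
# Key names are assumptions following common Keras argument names.
EXTRACTOR_CONFIG = {
    "optimizer": "adam",
    "learning_rate": 0.01,
    "epochs": 200,
    "batch_size": 16,
    "loss": "categorical_crossentropy",
}

# Table 1 hyperparameters for the classifier.
# These three keys are accepted keyword arguments of lightgbm.LGBMClassifier.
CLASSIFIER_CONFIG = {
    "boosting_type": "gbdt",
    "learning_rate": 0.01,
    "max_depth": 4,
}
```

With lightgbm installed, `lightgbm.LGBMClassifier(**CLASSIFIER_CONFIG)` would build a classifier with these values. Table 1 lists no other classifier settings (such as the number of trees), so library defaults would apply to everything else. On the Keras side, the extractor values map onto `keras.optimizers.Adam(learning_rate=0.01)` in `compile` and `epochs=200, batch_size=16` in `fit`.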


| Model | Average accuracy (%) | Average F1-score (%) |
|---|---|---|
| logMel + mobileNetV3 | 90.20 | 90.11 |
| logMel + EfficientNetV2 | 93.08 | 93.20 |
| logMelMAPS + EfficientNetV2 | 95.69 | 95.73 |
| EGeMAPS + EfficientNetV2 | 77.41 | 77.45 |
| logEGeMAPS + EfficientNetV2 | 91.56 | 91.42 |
| Deep logEGeMAPS + lightGBM | 97.13 | 97.12 |
| Deep logMelMAPS + lightGBM | 98.71 | 98.69 |
| Deep fusion features (mobileNetV3) + lightGBM | 97.77 | 97.76 |
| Deep fusion features + SVM | 98.83 | 98.82 |
| Deep fusion features + Random Forest | 98.82 | 98.81 |
| Deep fusion features + XGBoost | 98.64 | 98.63 |
| Deep fusion features + lightGBM | 98.70 | 98.82 |

Table 2 Benchmark results on the Beijing Bird dataset (the best result is highlighted in bold)
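The two improvements quoted in the abstract can be reproduced from Table 2 as percentage-point differences in average accuracy. This section does not state which baseline rows the abstract compares against. The pairing below is an assumption that matches both quoted figures.

```python
# Average accuracies (%) copied from Table 2.
acc = {
    "logMel + EfficientNetV2": 93.08,
    "logMelMAPS + EfficientNetV2": 95.69,
    "Deep logMelMAPS + lightGBM": 98.71,
    "Deep fusion features + lightGBM": 98.70,
}


def gain(new: str, old: str) -> float:
    """Accuracy gain of row `new` over row `old`, in percentage points (2 dp)."""
    return round(acc[new] - acc[old], 2)


# Assumed pairing: fused features vs. the plain logMel end-to-end network.
fusion_gain = gain("Deep fusion features + lightGBM", "logMel + EfficientNetV2")
# Assumed pairing: same logMelMAPS input, lightGBM head vs. end-to-end network.
lightgbm_gain = gain("Deep logMelMAPS + lightGBM", "logMelMAPS + EfficientNetV2")
print(fusion_gain, lightgbm_gain)  # 5.62 3.02
```

Rounding to two decimals removes floating-point noise, so the results match the table's precision.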


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| GWO-KELM | 91.16 | 88.54 | Li, 2022① |
| logMel + CRNN | 92.89 | 89.64 | Adavanne et al., 2017 |
| logMel + CNN | 91.12 | 88.47 | Bold et al., 2019 |
| logMel + DSRN + DilatedSAM + BiLSTM | 96.58 | 96.51 | Li, 2022① |
| Deep fusion features + lightGBM | 98.70 | 98.82 | This study |

① Li DP (2022) Research on bird sound recognition algorithms in natural scenes. Master's thesis, Nanjing University of Information Science and Technology, Nanjing.

Table 3 Comparison of different models on the Beijing Bird dataset (the best result is highlighted in bold)
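The tables report an "average F1-score" but do not define the averaging in this section. One common reading is the macro-averaged F1: compute F1 per class, then take the unweighted mean over classes. The sketch below implements that reading alongside plain accuracy. Treat macro averaging as an assumption. The labels are toy values, not data from the paper.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of clips whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over every label seen in y_true or y_pred."""
    true_pos = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    n_pred = Counter(y_pred)
    n_true = Counter(y_true)
    scores = []
    for label in set(y_true) | set(y_pred):
        precision = true_pos[label] / n_pred[label] if n_pred[label] else 0.0
        recall = true_pos[label] / n_true[label] if n_true[label] else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels (not data from the paper).
y_true = ["sparrow", "sparrow", "heron", "heron", "stilt"]
y_pred = ["sparrow", "heron", "heron", "heron", "stilt"]
print(accuracy(y_true, y_pred))            # 0.8
print(round(macro_f1(y_true, y_pred), 4))  # 0.8222
```

Here one sparrow clip is misread as a heron. Accuracy drops by one clip in five. Macro F1 instead averages the per-class scores: sparrow 0.667 (low recall), heron 0.8 (low precision) and stilt 1.0. scikit-learn's `f1_score(y_true, y_pred, average="macro")` should give the same value for these inputs.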


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| logMel + SVM | 93.96 | - | Salamon et al., 2016 |
| Deep fusion feature-1-2-3 + min-max normalization + KNN | 93.89 | - | Ji et al., 2022 |
| Deep fusion features + lightGBM | 98.32 | 98.04 | This study |

Table 4 Comparison of different models on the CLO-43SD dataset (the best result is highlighted in bold)


| Model | Average accuracy (%) | Average F1-score (%) | Reference |
|---|---|---|---|
| Best model on the BirdCLEF2022 public leaderboard | - | 91.28 | https://www.kaggle.com/competitions/birdclef-2022/leaderboard?tab=public |
| Deep logMelMAPS + lightGBM | 88.53 | 88.32 | This study |
| Deep fusion features + SVM | 89.40 | 89.22 | This study |
| Deep fusion features + Random Forest | 85.47 | 84.77 | This study |
| Deep fusion features + XGBoost | 85.71 | 85.47 | This study |
| Deep fusion features + lightGBM | 91.12 | 91.05 | This study |

Table 5 Comparison of different models on the BirdCLEF2022 competition dataset (the best result is highlighted in bold)


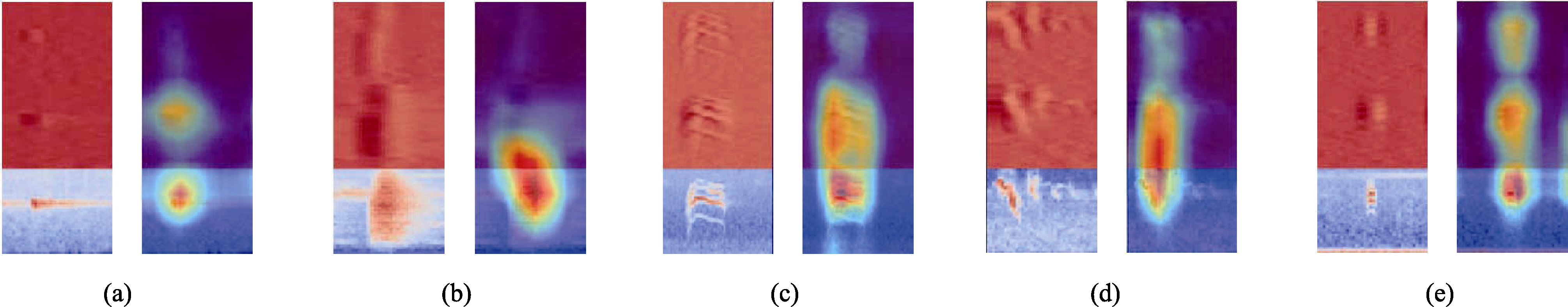

Fig. 3 Class activation maps of five bird sound types. Panels a-e show the logMelMAPS, and the corresponding class activation map, for five species and sound types:

- a: Western Water Rail (single-frequency)
- b: Grey Heron (noise-like)
- c: Eurasian Buzzard (strong harmonics)
- d: sparrow (frequency-modulated)
- e: Black-winged Stilt (broadband pulses)

In each panel, the left image is the logMelMAPS feature map. From bottom to top, it stacks the logMel spectrogram, the logMel first-order difference and the logMel second-order difference. The horizontal axis is time and the vertical axis is frequency. The right image is the corresponding class activation map, where darker colors indicate regions to which the neural network pays more attention.
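Fig. 3 describes two computations. The first is building logMelMAPS by stacking a log-Mel spectrogram with its first- and second-order differences. The second is the class activation map, presumably Grad-CAM, since the reference list cites Selvaraju et al. (2017). Below is a NumPy sketch of both under stated assumptions:

- The differences use the standard HTK-style regression delta with a 2-frame half-window. The paper's exact difference operator and window are not given in this section.
- Grad-CAM takes a convolutional layer's activations and class-score gradients as ready-made arrays. Obtaining them from the real network, and upsampling the map to input size, are framework-specific steps that are omitted.

All function names are hypothetical.

```python
import numpy as np


def delta(feat: np.ndarray, n: int = 2) -> np.ndarray:
    """Regression delta along the time axis (axis 1) with edge padding.

    d_t = sum_{k=1..n} k * (c_{t+k} - c_{t-k}) / (2 * sum_{k=1..n} k**2)
    """
    frames = feat.shape[1]
    padded = np.pad(feat, ((0, 0), (n, n)), mode="edge")
    num = sum(
        k * (padded[:, n + k : n + k + frames] - padded[:, n - k : n - k + frames])
        for k in range(1, n + 1)
    )
    return num / (2 * sum(k * k for k in range(1, n + 1)))


def log_mel_maps(log_mel: np.ndarray) -> np.ndarray:
    """Stack logMel, its first-order and second-order deltas along the frequency axis.

    Row blocks are ordered logMel, delta, delta-delta; plotted with origin="lower"
    they appear bottom to top, as in Fig. 3.
    """
    d1 = delta(log_mel)
    d2 = delta(d1)
    return np.concatenate([log_mel, d1, d2], axis=0)


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM map for one class from one convolutional layer.

    activations: (K, H, W) feature maps A^k.
    gradients:   (K, H, W) gradients of the class score with respect to A^k.
    Returns an (H, W) map rescaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))  # alpha_k: spatially averaged gradients
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)  # ReLU(sum_k alpha_k A^k)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy input: 4 mel bands whose values rise linearly over 10 frames.
log_mel = np.tile(np.arange(10, dtype=float), (4, 1))
maps = log_mel_maps(log_mel)
print(maps.shape)  # (12, 10)

# Toy layer: channel 0 supports the class, channel 1 opposes it.
acts = np.array([[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]])
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heat = grad_cam(acts, grads)
```

For the linear ramp, the first-order delta equals 1 on interior frames and the second-order delta is 0 there. In the toy Grad-CAM call, only the cell activated by the supporting channel survives the ReLU, so `heat` is 1 at that cell and 0 elsewhere. This mirrors the "darker means more attention" reading of the right-hand maps in Fig. 3.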

References:

[1] Adavanne S, Drossos K, Cakir E, Virtanen T (2017) Stacked convolutional and recurrent neural networks for bird audio detection. In: Proceedings of the 25th European Signal Processing Conference (EUSIPCO), Kos, Greece.

[2] Bold N, Zhang C, Akashi T (2019) Cross-domain deep feature combination for bird species classification with audio-visual data. IEICE Transactions on Information and Systems, 102, 2033-2042.

[3] Brandes TS (2008) Automated sound recording and analysis techniques for bird surveys and conservation. Bird Conservation International, 18, S163-S173.

[4] Eyben F, Scherer KR, Schuller BW, Sundberg J, André E, Busso C, Devillers LY, Epps J, Laukka P, Narayanan SS, Truong KP (2015) The Geneva minimalistic acoustic parameter set (GeMAPS) for voice research and affective computing. IEEE Transactions on Affective Computing, 7, 190-202.

[5] Eyben F, Wöllmer M, Schuller B (2010) openSMILE: The Munich versatile and fast open-source audio feature extractor. In: Proceedings of the 18th ACM International Conference on Multimedia (eds del Bimbo A, Chang SF), pp. 1459-1462. Association for Computing Machinery, New York.

[6] Gupta G, Kshirsagar M, Zhong M, Gholami S, Ferres JL (2021) Comparing recurrent convolutional neural networks for large scale bird species classification. Scientific Reports, 11, 17085.

[7] Ji XS, Jiang K, Xie J (2022) Deep feature fusion of multi-dimensional neural network for bird call recognition. Journal of Signal Processing, 38, 844-853. (in Chinese with English abstract)

[8] Ke GL, Meng Q, Finley T, Wang TF, Chen W, Ma WD, Ye QW, Liu TY (2017) LightGBM: A highly efficient gradient boosting decision tree. In: NIPS'17: Proceedings of the 31st International Conference on Neural Information Processing Systems (eds von Luxburg U, Guyon I, Bengio S, Wallach H, Fergus R), pp. 3149-3157. Curran Associates Inc., New York.

[9] Li HC, Yang DW, Wen ZF, Wang YN, Chen AB (2022) Inception-CSA deep learning model-based classification of bird sounds. Journal of Huazhong Agricultural University, 42(3), 97-104. (in Chinese with English abstract)

[10] Salamon J, Bello JP, Farnsworth A, Robbins M, Keen S, Klinck H, Kelling S (2016) Towards the automatic classification of avian flight calls for bioacoustic monitoring. PLoS ONE, 11, e0166866.

[11] Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D (2017) Grad-CAM: Visual explanations from deep networks via gradient-based localization. In: 2017 IEEE International Conference on Computer Vision (ICCV) (ed. O'Conner L), pp. 618-626. IEEE Computer Society Customer Service Center, California.

[12] Sprengel E, Jaggi M, Kilcher Y, Hofmann T (2016) Audio based bird species identification using deep learning techniques. In: Conference and Labs of the Evaluation Forum (CLEF) 2016, pp. 547-559. Évora, Portugal.

[13] Stowell D (2022) Computational bioacoustics with deep learning: A review and roadmap. PeerJ, 10, e13152.

[14] Tan MX, Le QV (2021) EfficientNetV2: Smaller models and faster training. arXiv e-prints, doi: 10.48550/arXiv.2104.00298.

[15] Xie J, Zhu MY (2019) Handcrafted features and late fusion with deep learning for bird sound classification. Ecological Informatics, 52, 74-81.

[16] Yan N, Chen AB, Zhou GX, Zhang ZQ, Liu XY, Wang JW, Liu ZH, Chen WJ (2021) Birdsong classification based on multi-feature fusion. Multimedia Tools and Applications, 80, 36529-36547.

[17] Zhang FY, Zhang LY, Chen HX, Xie JJ (2021) Bird species identification using spectrogram based on multi-channel fusion of DCNNs. Entropy, 23, 1507.

